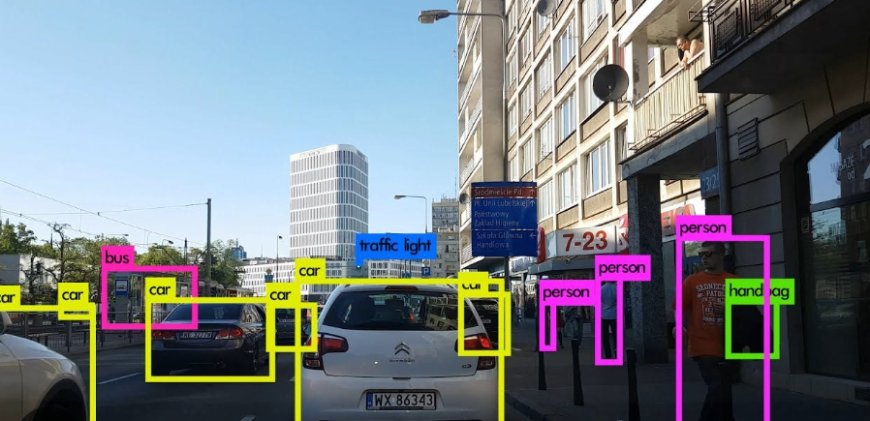

Object detection

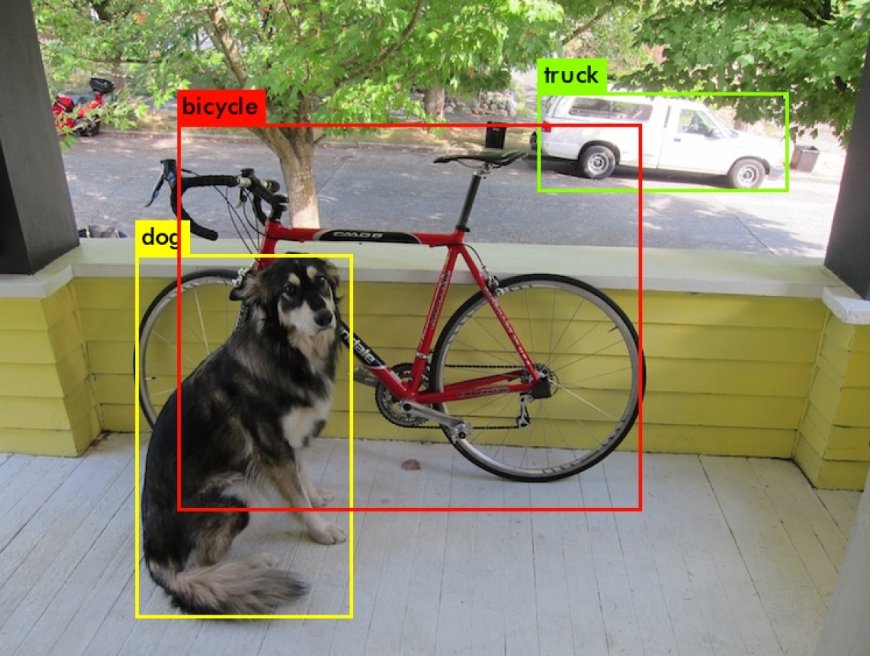

Object detection locates objects in an image with bounding boxes (BBs) and assigns a class label to each located object.

https://pjreddie.com/darknet/yolo/

- We use a pretrained YOLO model trained on the COCO dataset.
- First, we load the pretrained YOLO model using the Deep Neural Network (dnn) module of OpenCV.
- Convert the frame to a blob.
- Process the output and draw bounding boxes.
- Objects are detected using the pretrained weights and the dnn (Deep Neural Network) module of OpenCV.
- Display the resulting frame.

Proof of Concept Document: Real-time Object Detection using YOLO

Objective:

The goal of this Proof of Concept (POC) is to demonstrate real-time object detection using the YOLO (You Only Look Once) deep learning model. The implementation utilizes the OpenCV library in Python.

Technologies Used:

- OpenCV (cv2): A popular computer vision library that provides tools for image and video analysis.
- OpenCV's Deep Neural Network module (cv2.dnn): Used to load the YOLO model and run inference.
- NumPy: A library for numerical operations in Python, used for handling arrays and matrices.

Code Overview:

The provided Python script uses the YOLOv3 model to perform real-time object detection on a webcam feed. Here is a brief overview of the key components:

- Loading YOLO Model:
  - The YOLO model is loaded using cv2.dnn.readNet, specifying the path to the model weights (yolov3.weights) and configuration file (yolov3.cfg).
  - The COCO class names are loaded from the file coco.names.
- Webcam Capture:
  - The script captures video frames in real-time using the OpenCV VideoCapture object (webcam with index 0).
- Object Detection:
  - Each frame is preprocessed into a blob using cv2.dnn.blobFromImage.
  - The blob is fed into the YOLO model using net.setInput(blob), and the output is obtained with net.forward(layer_names).
  - The output is processed to identify objects with confidence greater than 0.5.
  - Non-maximum suppression (cv2.dnn.NMSBoxes) is applied to filter out overlapping bounding boxes.
- Drawing Bounding Boxes:
  - Bounding boxes are drawn around detected objects on the frame.
  - The class label, confidence score, and box coordinates are displayed for each detected object.
- Display and Exit:
  - The resulting frame with bounding boxes is displayed in a window titled "Real-time Object Detection".
  - The script continues to run until the user presses 'q', at which point the video capture is released and the OpenCV window is closed.

Execution:

To run the script, make sure you have the YOLOv3 weights, configuration file, and COCO class names file in the same directory as the script. Execute the script, and a window will open showing real-time object detection from your webcam feed.
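Before starting the script, a short pre-flight check along these lines can confirm that the three files are in place. This is a hedged sketch: `missing_model_files` and `load_class_names` are hypothetical helper names, and the one-label-per-line format assumed for coco.names is the common convention rather than something stated here.

```python
from pathlib import Path

# File names taken from the text above.
REQUIRED_FILES = ("yolov3.weights", "yolov3.cfg", "coco.names")


def missing_model_files(directory="."):
    """Return the required file names that are absent from `directory`."""
    base = Path(directory)
    return [name for name in REQUIRED_FILES if not (base / name).is_file()]


def load_class_names(path="coco.names"):
    """Read one class label per line, skipping blank lines (assumed format)."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


missing = missing_model_files()
if missing:
    print("Missing files:", ", ".join(missing))
else:
    print("All model files found;", len(load_class_names()), "class names loaded")
```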

Conclusion:

This Proof of Concept demonstrates a simple yet effective implementation of real-time object detection using the YOLOv3 model and OpenCV. It serves as a foundation for building more advanced computer vision applications and can be extended for various use cases.
